RedPajama replicates LLaMA dataset to build open source, state-of-the-art LLMs

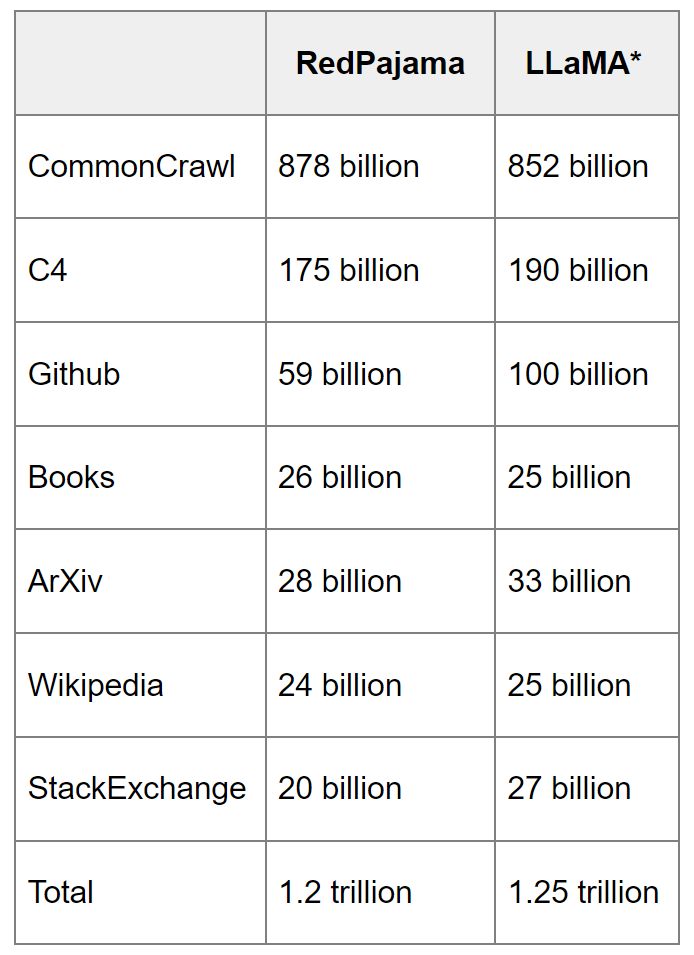

RedPajama, a project to create fully open-source large language models, has released a 1.2 trillion token dataset that follows the LLaMA recipe.

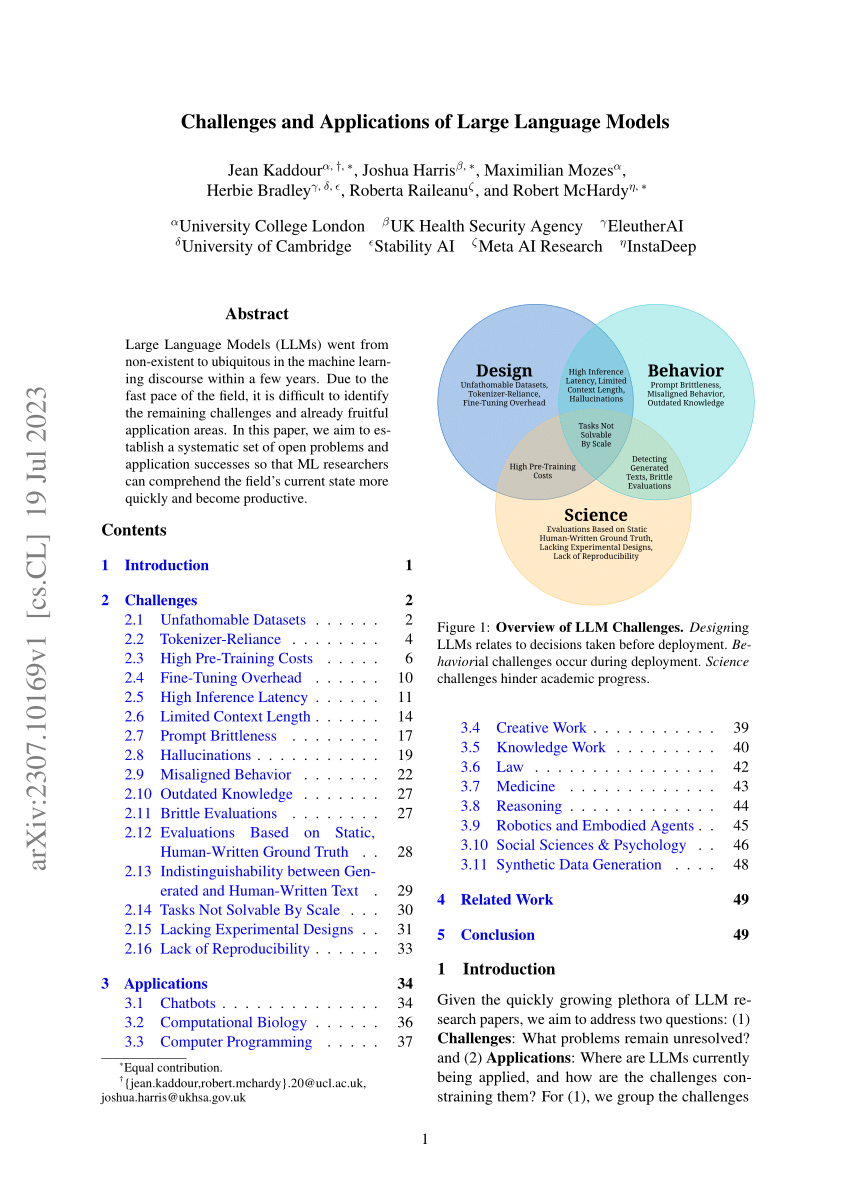

Why LLaMA-2 is such a Big Deal

RedPajama 7B now available, instruct model outperforms all open 7B models on HELM benchmarks

GitHub - openlm-research/open_llama: OpenLLaMA, a permissively licensed open source reproduction of Meta AI's LLaMA 7B trained on the RedPajama dataset

What is RedPajama? - by Michael Spencer

🎮 Replica News

Vipul Ved Prakash on LinkedIn: RedPajama replicates LLaMA dataset to build open source, state-of-the-art…

Open-Sourced Training Datasets for Large Language Models (LLMs)
