What's in the RedPajama-Data-1T LLM training set

RedPajama is “a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens”. It’s a collaboration between Together, Ontocord.ai, ETH DS3Lab, Stanford CRFM, …

RedPajama-Data-v2: An open dataset with 30 trillion tokens for

Training on the rephrased test set is all you need: 13B models can

Web LLM runs the vicuna-7b Large Language Model entirely in your

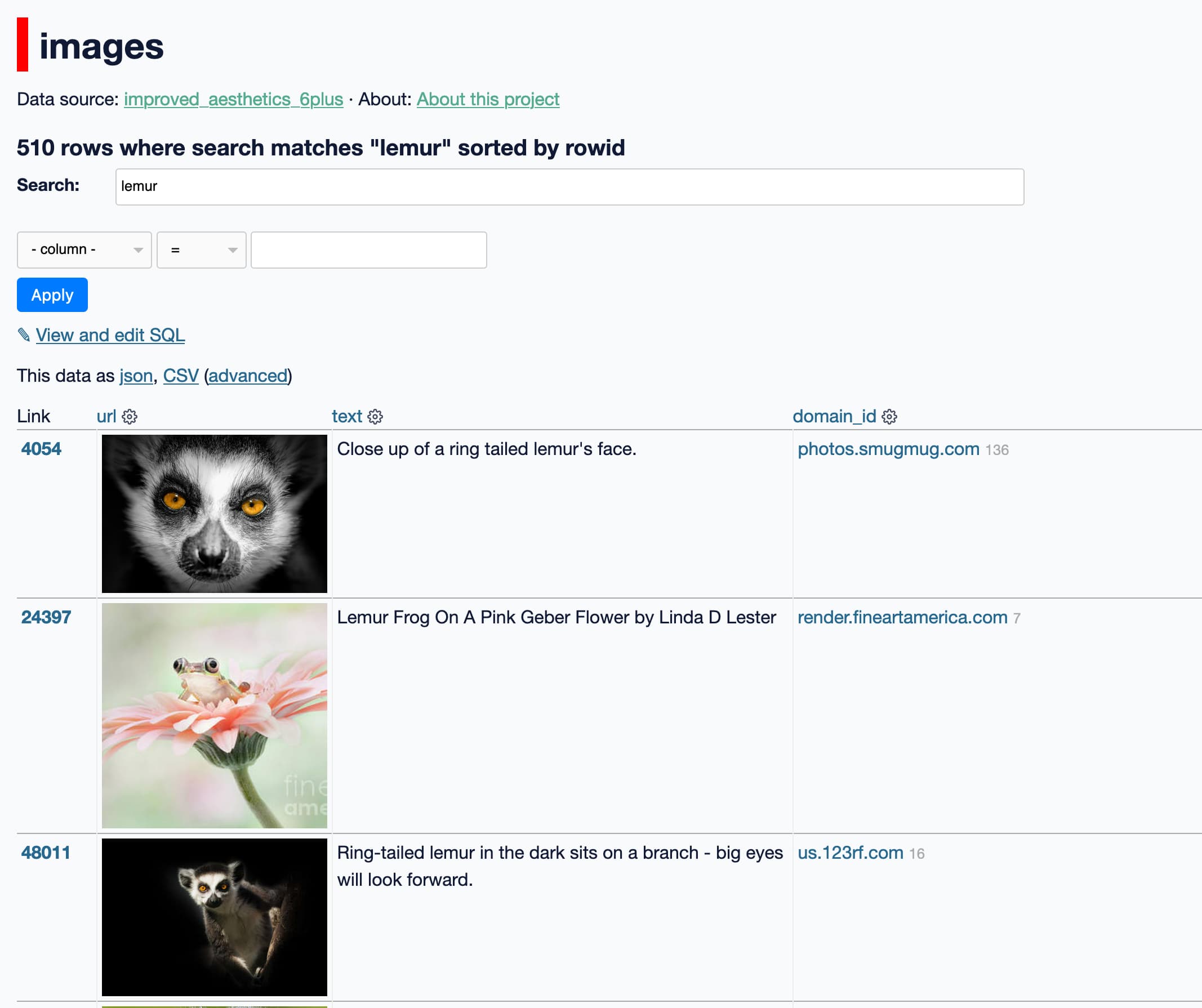

Exploring the training data behind Stable Diffusion

Skill it! A Data-Driven Skills Framework for Understanding and

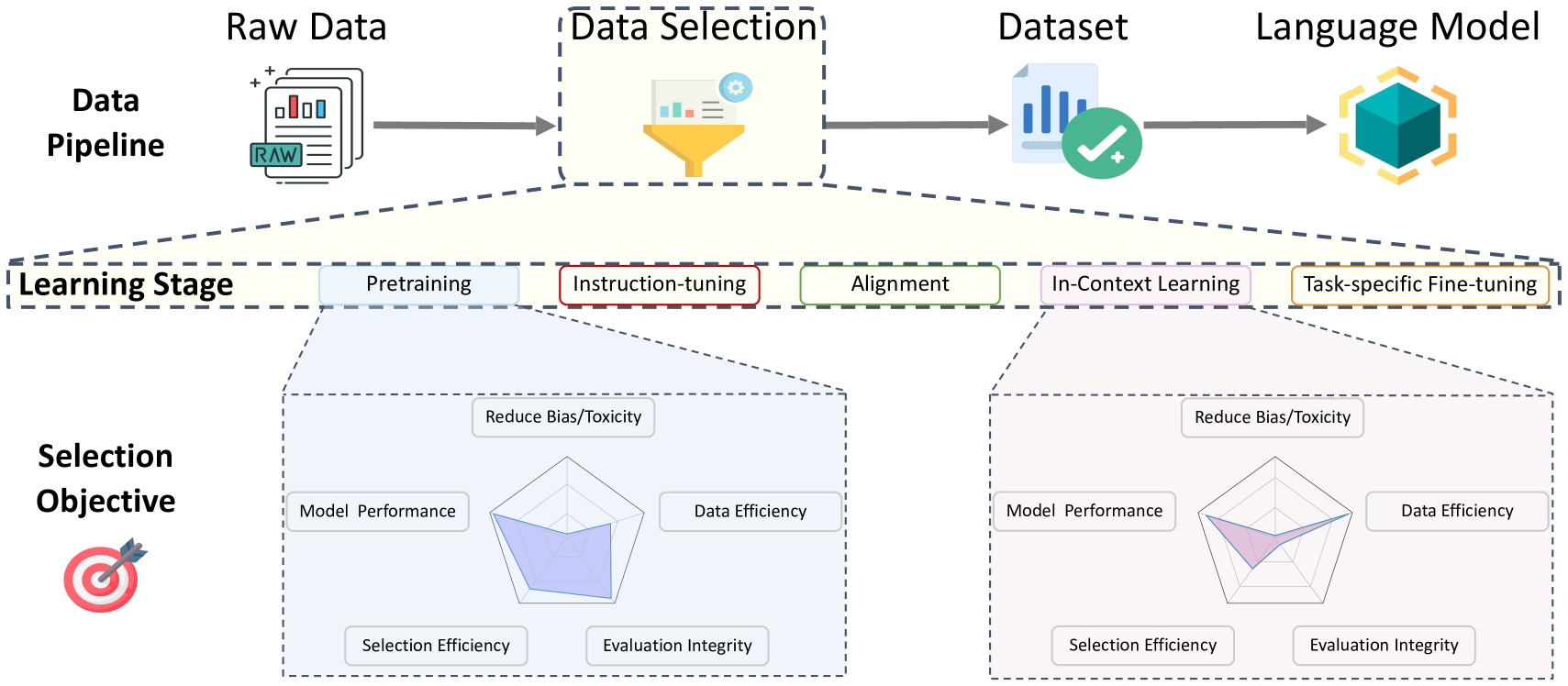

A Survey on Data Selection for Language Models

How we built better GenAI with programmatic data development

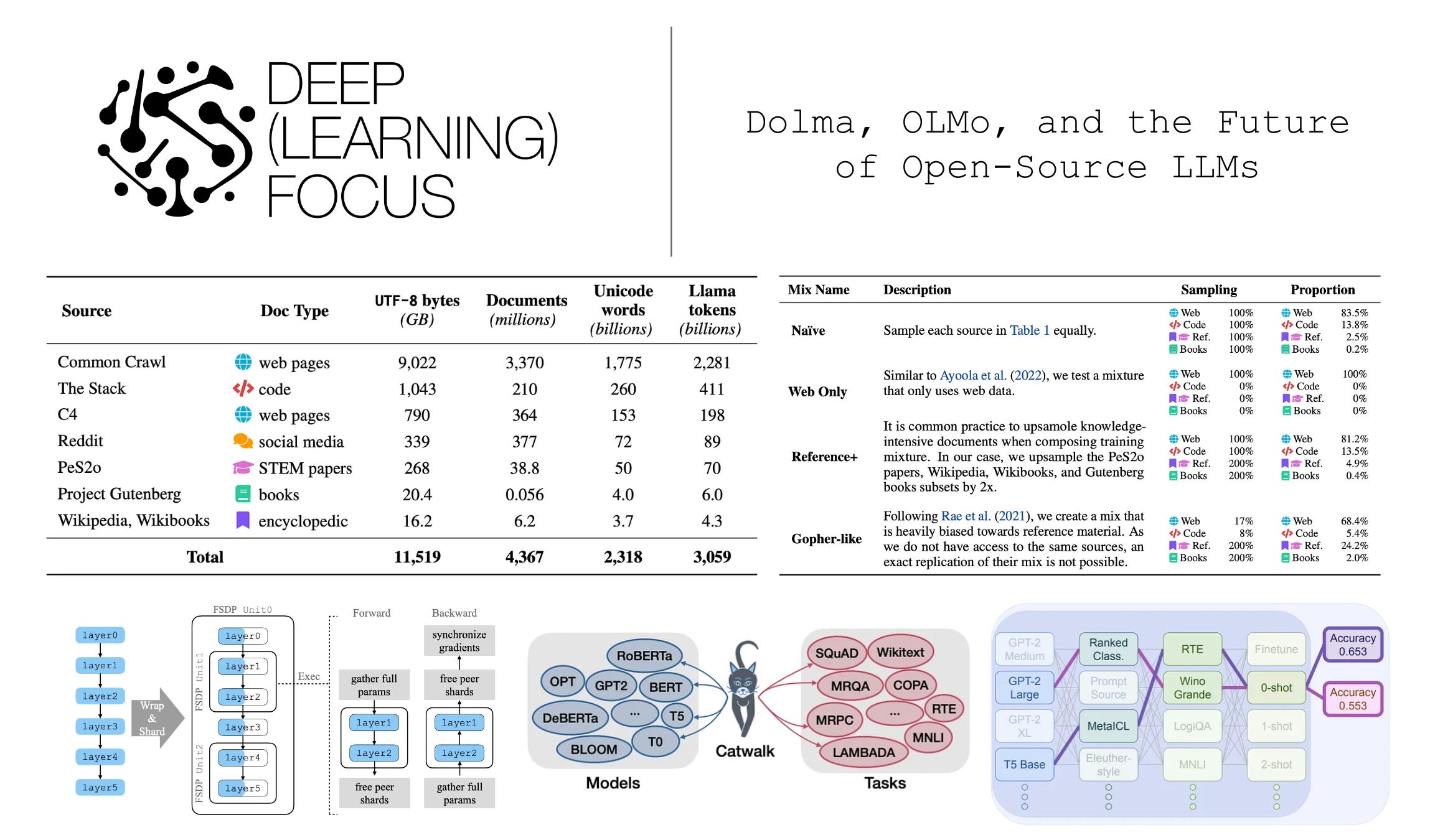

Dolma, OLMo, and the Future of Open-Source LLMs

Artificial Intelligence – Page 3 – Data Machina Newsletter – a

LaMDA to Red Pajama: How AI's Future Just Got More Exciting!

Ahead of AI #8: The Latest Open Source LLMs and Datasets

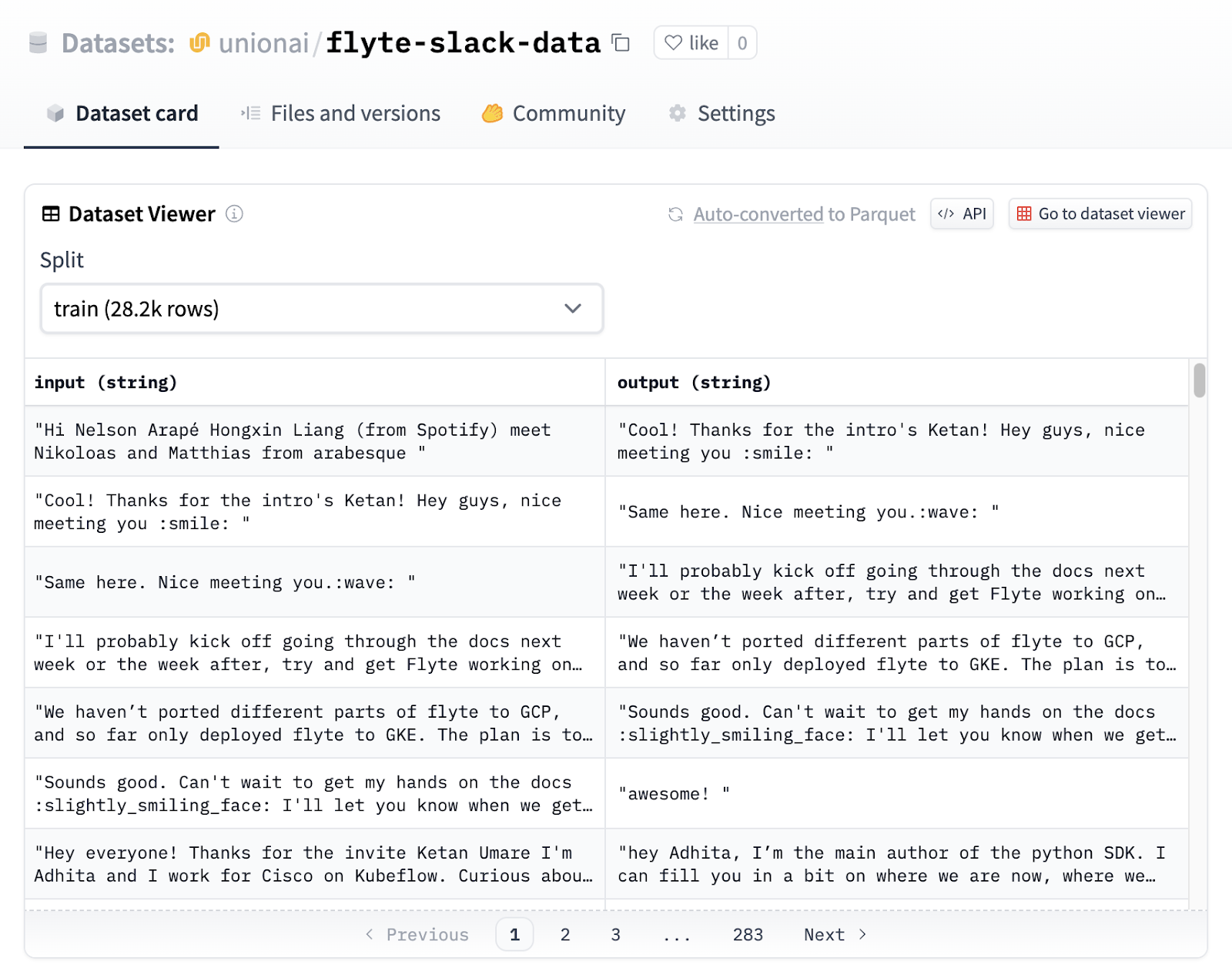

togethercomputer/RedPajama-Data-V2 · Datasets at Hugging Face

Fine-Tuning Insights: Lessons from Experimenting with RedPajama