Pre-training vs Fine-Tuning vs In-Context Learning of Large Language Models

4.8 (327) · $ 21.00 · In stock

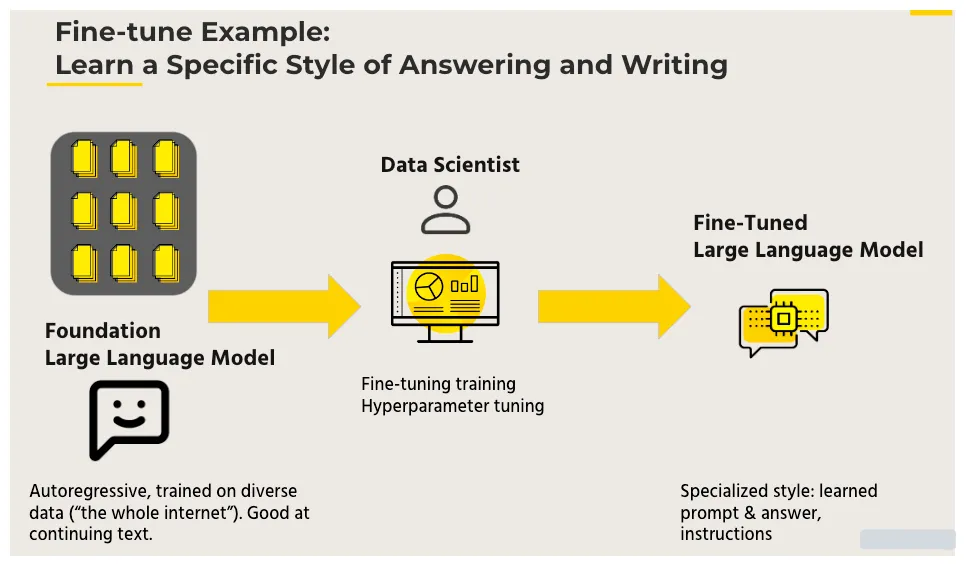

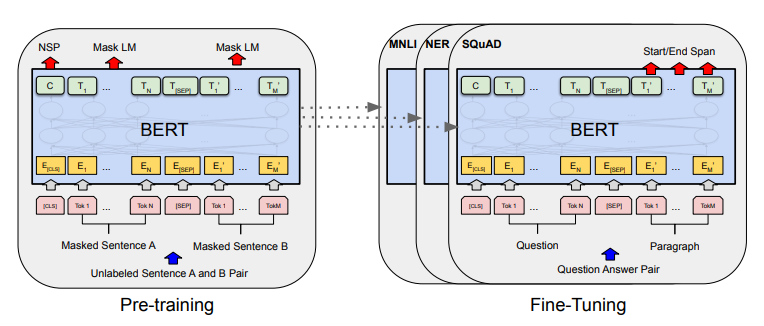

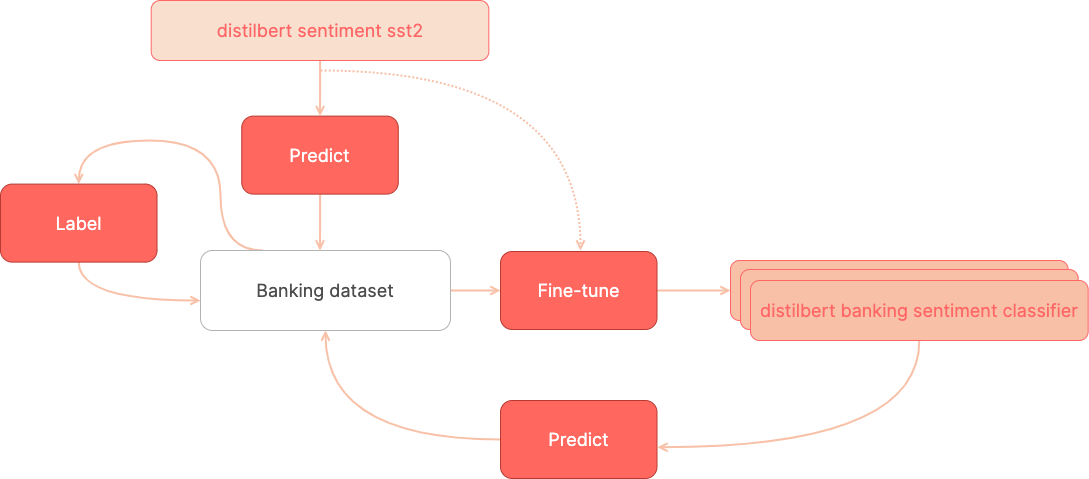

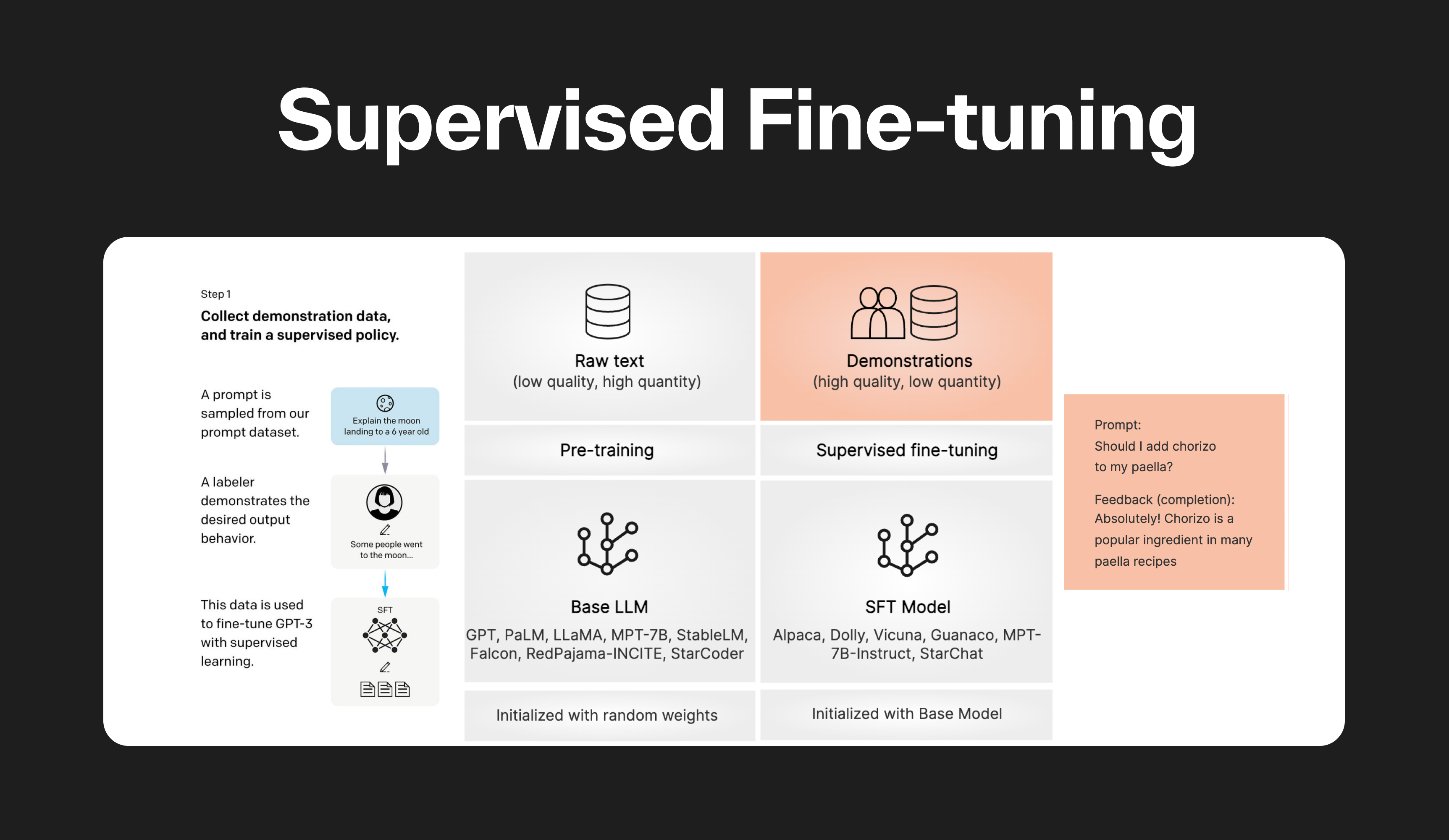

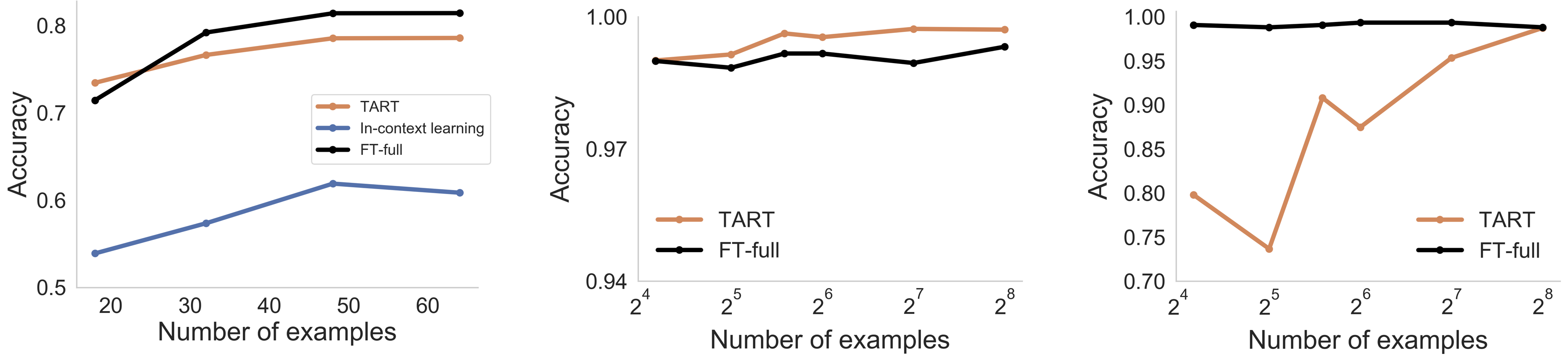

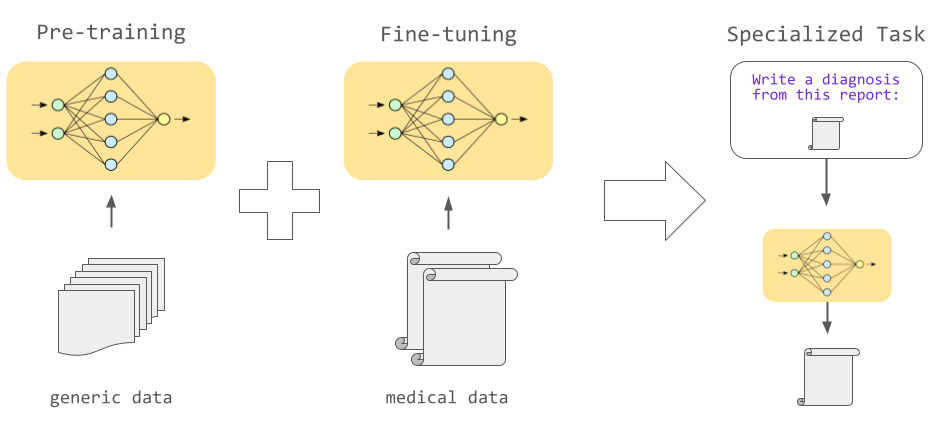

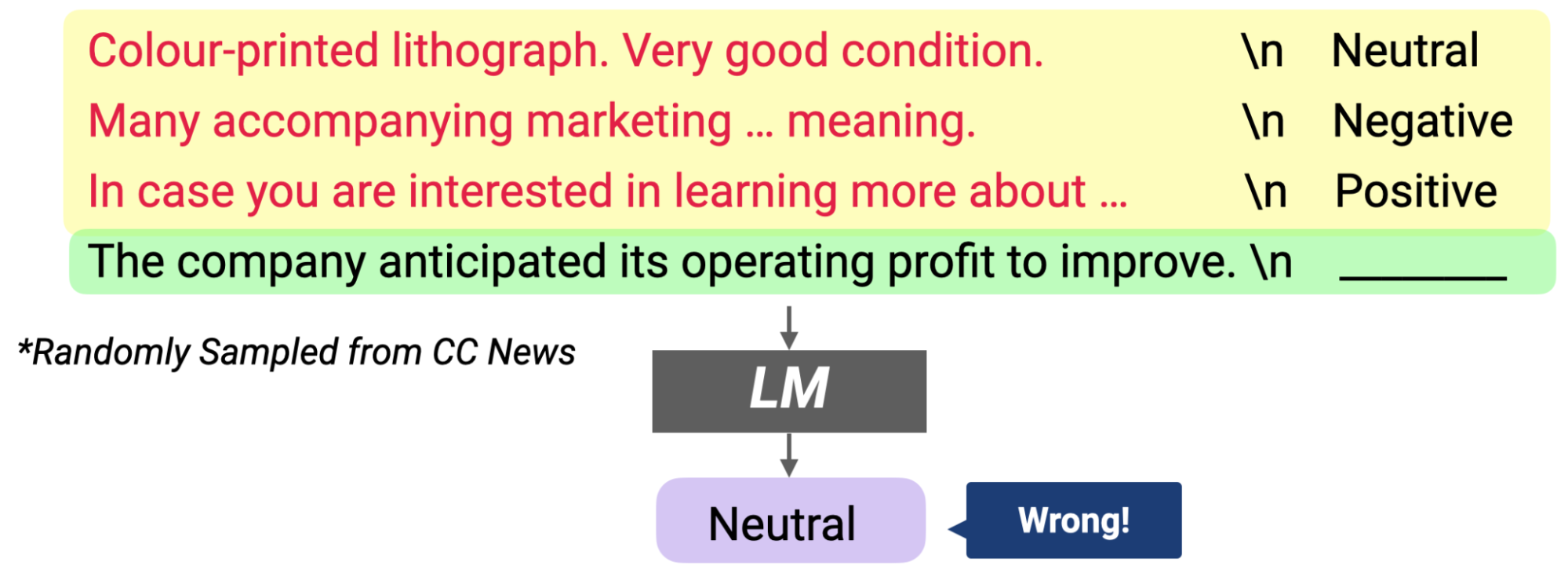

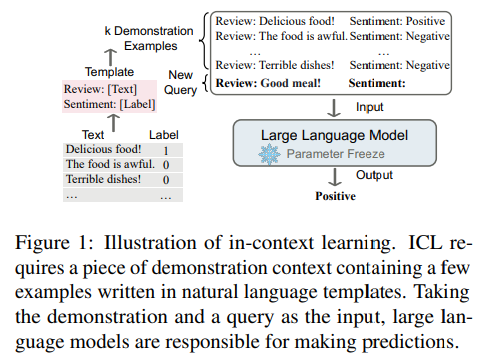

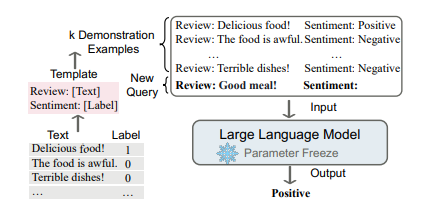

Large language models are first trained on massive text datasets in a process known as pre-training, through which they gain a solid grasp of grammar, facts, and reasoning. Next comes fine-tuning, which specializes them for particular tasks or domains. And let's not forget the one that makes prompt engineering possible: in-context learning, which lets models adapt their responses on the fly to the specific queries or prompts they are given, without any update to their weights.
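To make the in-context learning idea concrete, here is a minimal sketch of how a few-shot prompt might be assembled. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are hypothetical choices for illustration, not a standard format, and no model is actually called. The point is that the "training examples" live entirely inside the prompt text.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    demonstrations: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a few-shot prompt: instruction, labeled examples, then the new query.

    Hypothetical helper: the model's weights are never touched here; any
    "learning" would come only from the examples placed in the context window.
    """
    lines = [instruction, ""]
    for text, label in demonstrations:
        # Each demonstration shows the model one input/output pair.
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # Leave the final Output empty so the model is prompted to complete it.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


if __name__ == "__main__":
    demos = [
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ]
    prompt = build_few_shot_prompt(
        "Classify the sentiment of each review as positive or negative.",
        demos,
        "Setup was quick and painless.",
    )
    print(prompt)
```

The resulting string would be sent to a pre-trained (or fine-tuned) model as-is. Swapping in different demonstrations changes the model's behavior immediately, whereas fine-tuning would instead bake that behavior into the weights through additional training.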

Why is in-context learning lower quality than fine-tuning? And…what if it wasn't? · Hazy Research

The Full Story of Large Language Models and RLHF

How does in-context learning work? A framework for understanding the differences from traditional supervised learning

Articles Entry Point AI

All You Need to Know about In-Context Learning, by Salvatore Raieli

Pre-training Vs. Fine-Tuning Large Language Models

Fine Tuning vs. Prompt Engineering Large Language Models

In-Context Learning Approaches in Large Language Models, by Javaid Nabi

Pre-training, fine-tuning and in-context learning in Large Language Models (LLMs), by Kushal Shah